Visibility Isn’t Access: How Platforms Erase Disabled Creators

By Syanne Centeno for Repro Uncensored

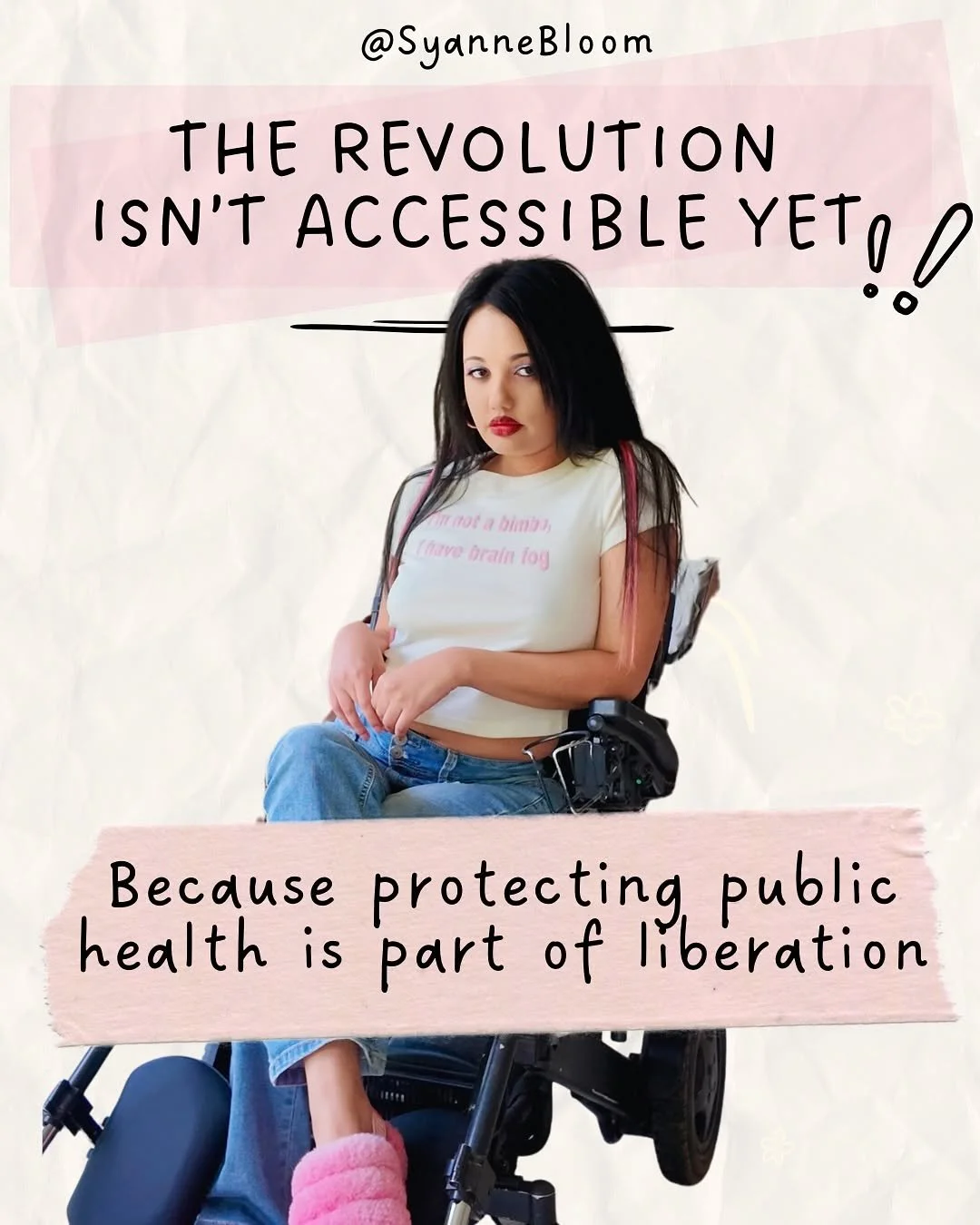

I navigate social platforms with the constant awareness that ableism is only a few scrolls away. My work is shaped by my lived experience as a disabled woman of the global majority. Being a disabled creator means living at the intersection of visibility and erasure.

Online platforms are built around speed, constant output, and algorithmic reward systems. None of these structures are designed for disabled people. My disability shapes how I pace myself, how I protect my health, and how I communicate. I understand power, exclusion, and systemic bias not as abstract concepts, but as realities I have had to survive. I use my platforms to challenge ableism and educate, while also navigating systems that routinely penalize creators who speak about disability, race, or injustice. My presence online becomes both resistance and reclamation.

Platforms Built Without Disabled Creators in Mind

Accessibility gaps appear at every stage of creating and sharing content. Platforms are not built with disabled creators in mind, and their algorithms often create invisible barriers. Content about disability, illness, race, or injustice is frequently deprioritized, demonetized, or flagged, forcing disabled creators to work harder simply to be seen.

The culture of online spaces is itself an accessibility barrier. There is an expectation of constant output and instant response that is incompatible with chronic illness, pain, and medical emergencies. Digital spaces mirror the physical world in their failure to accommodate disabled bodies.

Censorship of Disabled and Reproductive Narratives

I have experienced repeated platform suppression connected to disability and reproductive justice. Recently, Instagram removed the audio from multiple videos in which I discussed abortion access and my own experience with an ectopic pregnancy. None of these posts violated platform policies. Yet because they addressed disabled bodies, pregnancy loss, and reproductive healthcare, they were flagged and muted. This kind of censorship is routine.

How Platform Systems Reinforce Ableism

Platform systems reinforce ableism through both design and enforcement. They assume nonstop productivity and perfect health, which excludes disabled creators by default. Algorithmically, disability content, especially when it addresses illness, pain, or reproductive justice, is buried or suppressed. At the same time, openly ableist harassment often goes unaddressed. Disabled narratives are punished, while those who attempt to silence us are protected.

Fashion, Social Media, and the Same Logic of Exclusion

These patterns of exclusion are mirrored across both fashion spaces and social media. Both industries reward proximity to whiteness, thinness, wealth, and able-bodied ideals, and punish those who fall outside them. Disabled people are either hidden entirely or tokenized for profit.

Online, marginalized creators are copied, erased, or sidelined, while others are elevated for imitating our work. When disabled women of color speak honestly about our experiences, we are labeled as difficult. Visibility is reserved for the privileged, while the rest of us are expected to be grateful for whatever limited representation we are allowed.

Harassment, Policing, and Platform Neglect

Disabled creators also face sustained harassment online. This includes ableist slurs, accusations of faking disability, attacks on intelligence, and the policing of our bodies and medical trauma. When we speak about reproductive justice or medical neglect, the hostility intensifies. Platforms routinely fail to intervene, effectively protecting abusers while penalizing those who are harmed.

When Algorithms Reward Image but Punish Critique

Algorithms amplify certain parts of my work while suppressing others. My modeling is rewarded. My scholarship and political analysis are not. The platform benefits from my image but resists my critique. I have learned how to navigate and outmaneuver these systems, but survival should not require constant strategic adaptation.

Community Care as Collective Survival

Community care among disabled creators is an act of collective survival. We amplify one another when algorithms suppress our work, share resources when platforms censor our medical realities, and intervene when someone is targeted or silenced. Disabled creators do what platforms refuse to do: protect one another and ensure our stories are not erased.

What a Safer Digital Space Would Look Like

A safer and more accessible digital space would be one where disabled people are not muted, flagged, or punished for speaking about our bodies, our health, or our politics. It would mean an end to algorithmic penalties for disabled existence. That is not a radical demand. It is the bare minimum. And at the most basic level, people need to start captioning their videos.